In the new era of SDN and NFV, with its push towards network programmability and automation, the usual method of deploying changes on the network and validating the network state afterwards will not scale. The way we interact with the network needs to be more dynamic and agile, so we need to use the same DevOps tools that sysadmins have been using to manage their infrastructure and scale their operations. In this series of articles, we are going to discuss how to use Ansible and NAPALM to automate network provisioning and validation. We will outline how Ansible can be used to perform the following main tasks:

- Generating router configuration from templates.

- Deploying configuration on different network operating systems and platforms.

- Pre- and post-validation of a network deployment or change.

- Deploying new services (Internet Access or L3VPN) and validating that the service is deployed correctly.

In this first article, we will outline what Ansible is, the role of NAPALM in network automation, and how the two can be combined to deliver simple but powerful network automation for a multi-vendor environment.

What is Ansible?

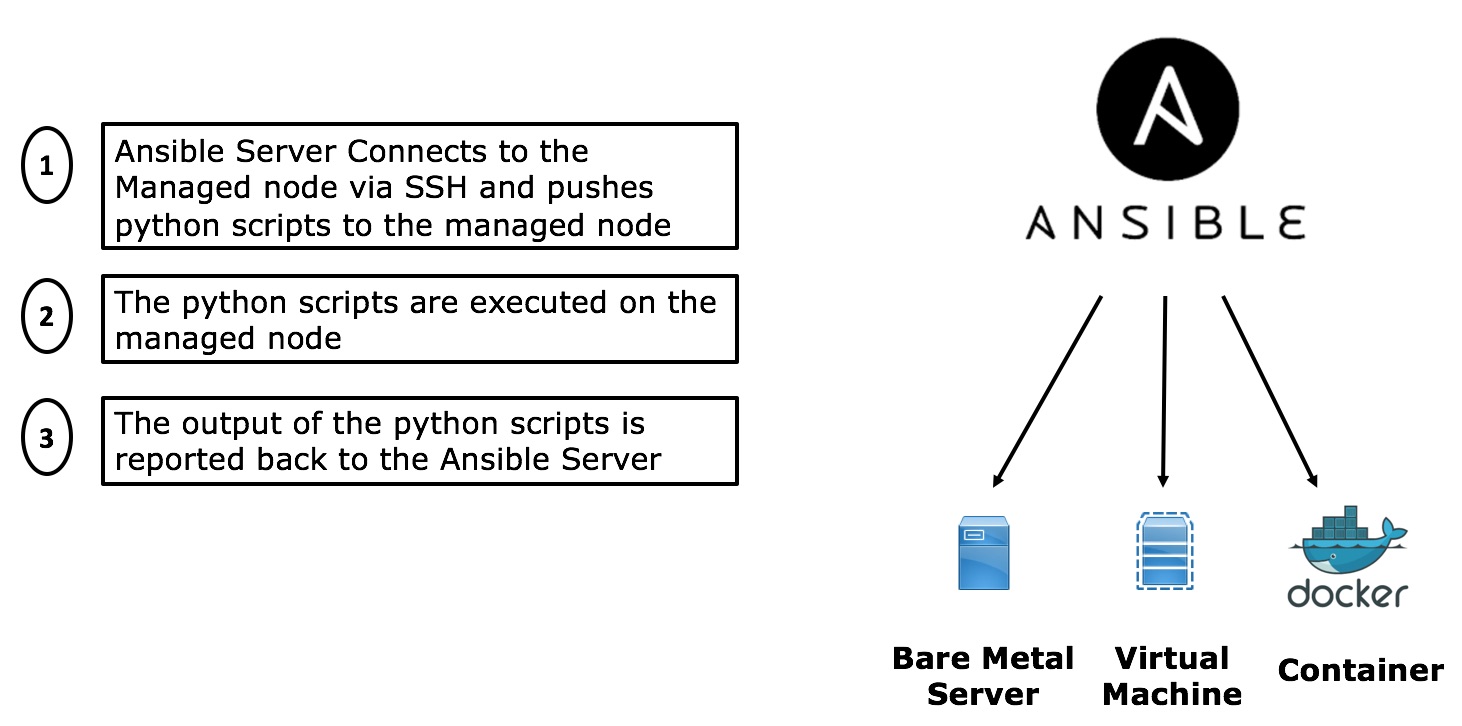

Ansible is, at its core, an IT automation tool used mainly to configure IT infrastructure (bare-metal servers, virtual machines and even containers), deploy software on those systems, and orchestrate workflows for the applications running on that infrastructure. It belongs to the family of Continuous Configuration Automation tools, alongside Puppet, Chef and SaltStack, which aim to simplify the management of IT infrastructure and data centers through an Infrastructure as Code approach: the state of the infrastructure is defined in human-readable definition files. Ansible is considered very easy to adopt and scale compared to Puppet and Chef because it is agentless: nothing has to be deployed on the remotely managed system. It uses a PUSH model, where the Ansible server node sends the required changes to the remote system. This differs from the approach used by tools like Puppet or Chef, which rely on an agent installed on the managed device that PULLs the required changes from the master server. The accompanying diagram outlines the high-level setup that Ansible uses to manage a typical IT infrastructure.

Where is the Network!!

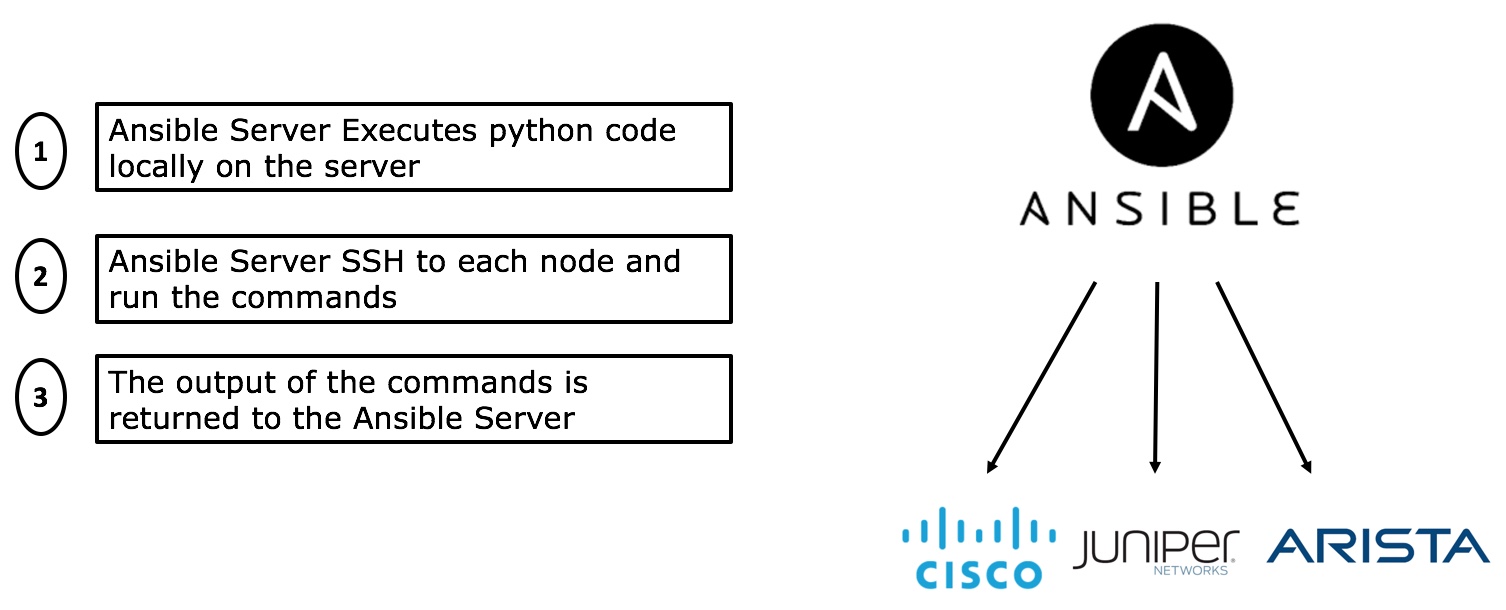

As stated earlier, Ansible started as an IT automation framework for managing servers (the operating system and the applications running on them), and later added support for networking hardware (especially data center switches). In the early stages, Ansible simply treated network nodes like servers and managed them via SSH: the Ansible server would log in to the managed network nodes, run CLI commands on them and report the output. The diagram below outlines this early stage of Ansible's interaction with network nodes.

When you are running Linux as the OS on your data center switches (as is the case with Cumulus Linux), you can treat your network nodes like your servers and execute the same playbooks and workflows to manage them. This is one of the main drivers for the adoption of whiteboxes and the use of Linux as the network OS.

This approach has many problems. Because the output from the devices is unstructured, Ansible only gets plain text back from the managed devices, and we are back to screen scraping and regular expressions to transform that text into valid data structures that Ansible can work with. Ansible modules such as ntc-ansible (which uses TextFSM to parse the output) offer a more programmatic approach to screen scraping; however, this is still very tedious.
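
To make the screen-scraping problem concrete, here is a minimal sketch in plain Python. The CLI output, the column layout and the function name `parse_interfaces` are all made up for illustration; real device output varies per OS and version, which is exactly why this approach is fragile.

```python
import re

# Hypothetical CLI output; real devices format this differently per OS and version.
RAW_OUTPUT = """\
Interface        Status    Protocol
Gi0/0            up        up
Gi0/1            down      down
Lo0              up        up
"""

# One data row: interface name, link status, protocol status, whitespace separated.
ROW_PATTERN = re.compile(
    r"^(?P<name>\S+)\s+(?P<status>up|down)\s+(?P<protocol>up|down)\s*$"
)


def parse_interfaces(raw):
    """Turn unstructured CLI text into a list of dicts, skipping non-matching lines."""
    rows = []
    for line in raw.splitlines():
        match = ROW_PATTERN.match(line)
        if match:  # the header line does not match and is ignored
            rows.append(match.groupdict())
    return rows


if __name__ == "__main__":
    for row in parse_interfaces(RAW_OUTPUT):
        print(row)
```

Any change in the column order or wording of the output breaks the pattern, so every command on every platform needs its own parser.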

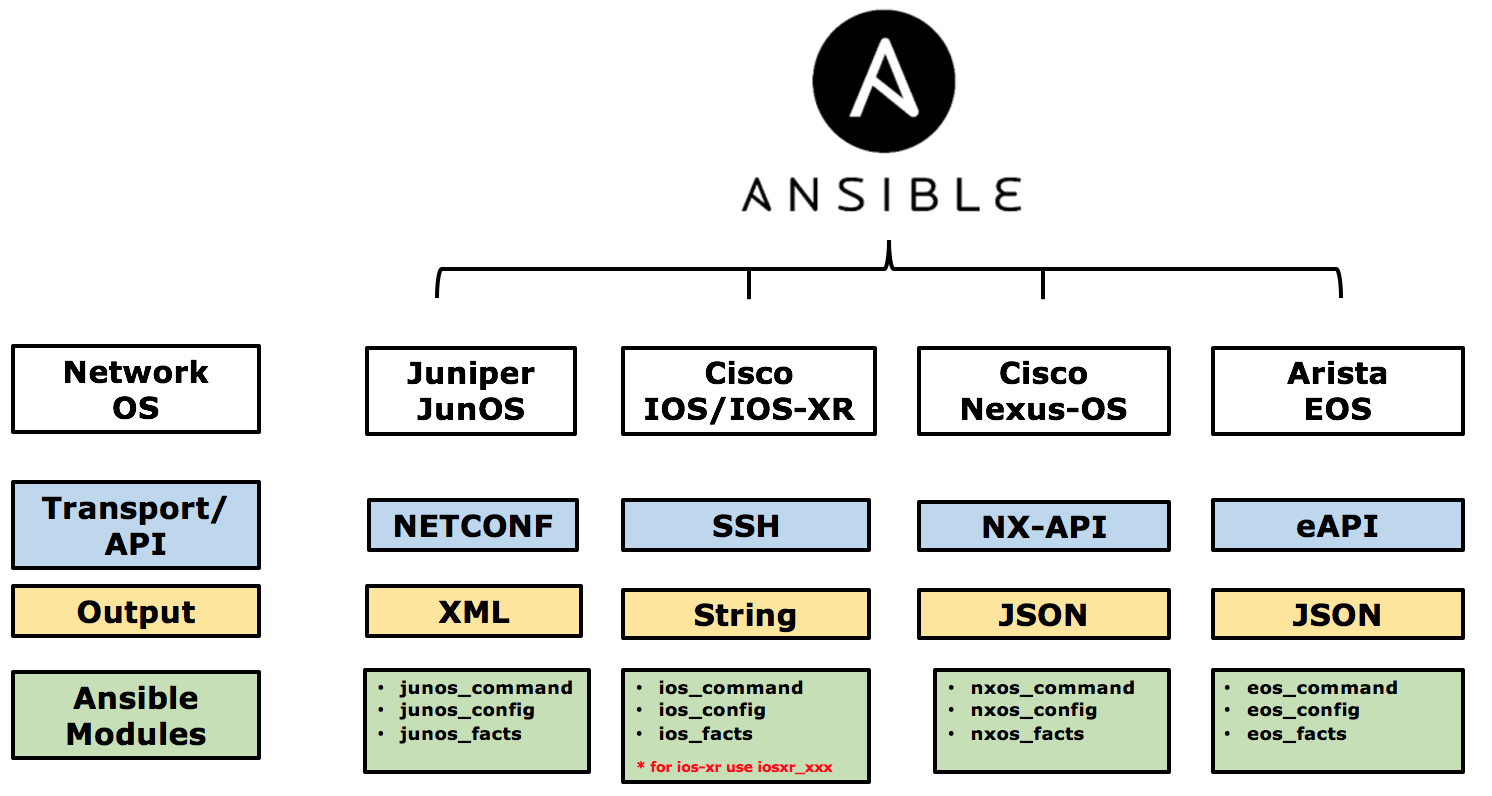

As networking vendors started to offer APIs for their network operating systems (such as NETCONF for Junos, NX-API for NX-OS or eAPI for Arista EOS), Ansible started to use these APIs to communicate with the network devices and get back structured data that is easy to parse. Ansible translates the XML or JSON returned by the executed commands into valid data structures that can then be used as facts or variables to control the execution of playbooks. The diagram below outlines the approach Ansible now uses to communicate with network devices.
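
As a rough illustration of why structured replies are easier to handle, the sketch below decodes a JSON reply and an XML reply into native Python data using only the standard library. Both payloads and both function names are hypothetical; real NETCONF or eAPI replies are larger and shaped differently.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical payloads, invented for this sketch.
JSON_REPLY = '{"hostname": "leaf1", "interfaces": ["Ethernet1", "Ethernet2"]}'
XML_REPLY = """
<system-information>
  <host-name>edge1</host-name>
  <serial-number>ABC123</serial-number>
</system-information>
"""


def facts_from_json(payload):
    """Decode a JSON document into dicts and lists."""
    return json.loads(payload)


def facts_from_xml(payload):
    """Flatten the direct children of the XML root element into a dict."""
    root = ET.fromstring(payload.strip())
    return {child.tag: child.text for child in root}


if __name__ == "__main__":
    print(facts_from_json(JSON_REPLY))
    print(facts_from_xml(XML_REPLY))
```

No regular expressions are needed: the fields are addressed by name, which is what allows them to be used directly as facts or variables.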

Ansible follows a similar pattern across platforms to present a common interface to different networking hardware, so the following modules are the ones most commonly used to manage network nodes:

- XXX_facts (ios_facts, junos_facts, iosxr_facts) to gather system-level information, such as the serial number and device model, from the nodes.

- XXX_command (ios_command, junos_command) to run commands on the managed node and report the output.

- XXX_config (iosxr_config, junos_config) to load configuration files or commands onto the managed nodes.

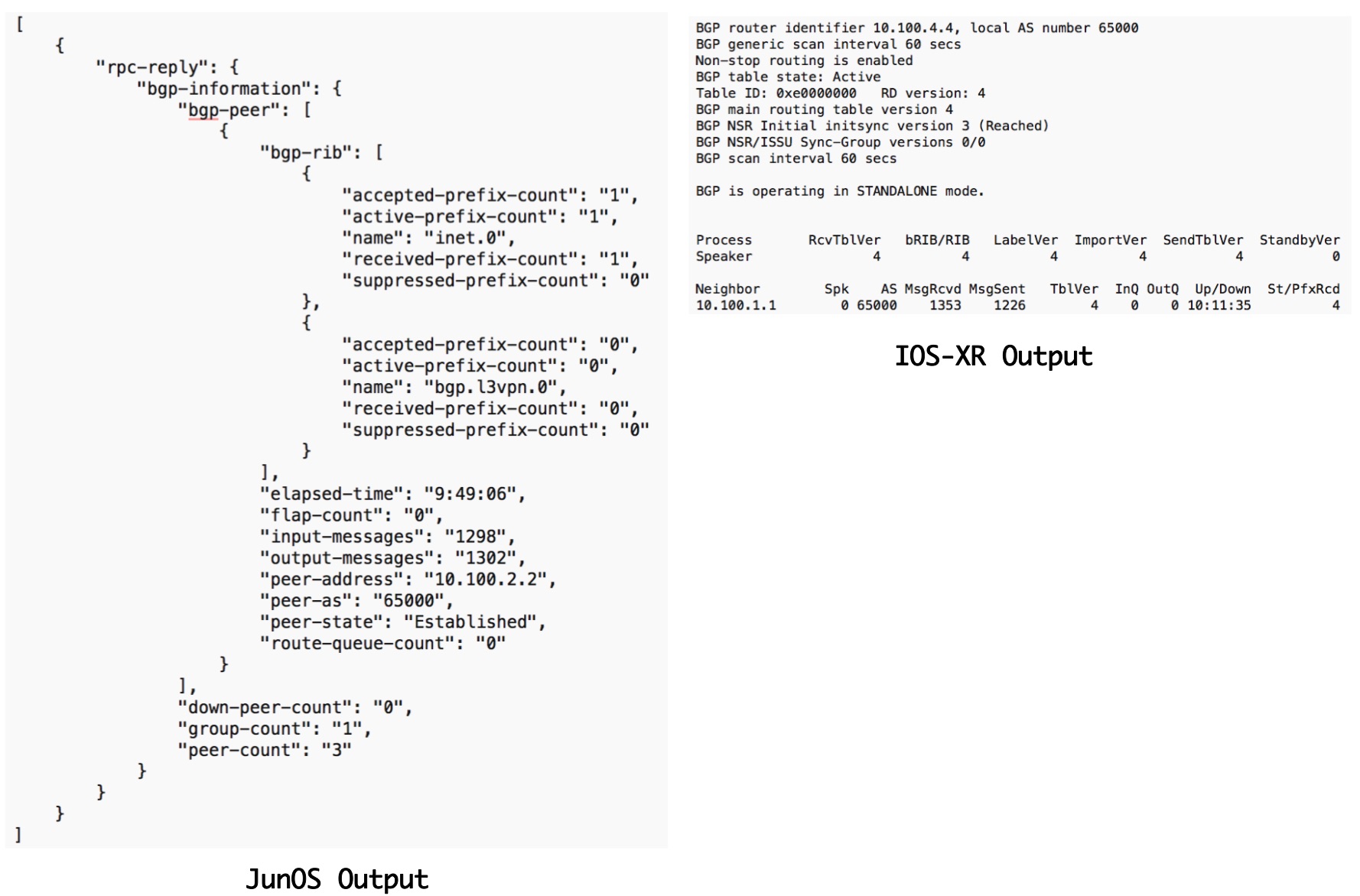

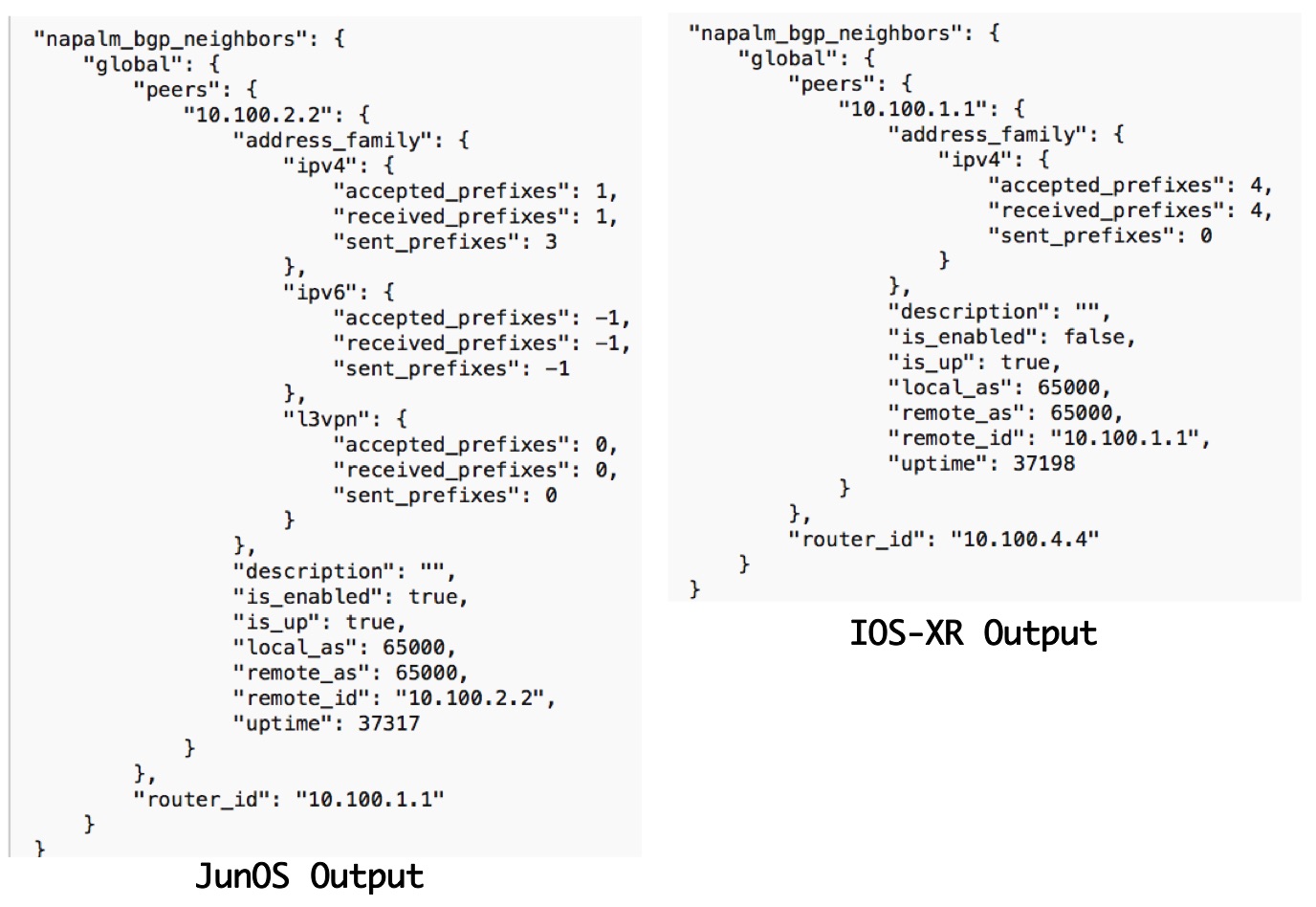

Even so, every network node returns different output (even if it is structured as XML or JSON), and we have to deal with these differences in the data returned from the nodes. The output below illustrates the difference between what Junos and IOS-XR return when asked for the same data (show bgp summary).

Finally, for some networking equipment (like NX-OS or Junos), further modules are available that configure the devices at a higher level of abstraction. Instead of using the default XXX_config module and typing the exact configuration commands needed, these declarative modules take only the variables you care about (such as enabling an interface or configuring a VLAN) and translate these high-level intents into the device-specific commands that are sent to the device.
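
The idea behind such declarative modules can be sketched in a few lines of Python. This is not how the Ansible modules are implemented; `render_vlan` and its parameters are hypothetical, and the command strings are simplified examples of each platform's VLAN syntax.

```python
def render_vlan(platform, vlan_id, name):
    """Translate a platform-neutral VLAN intent into device-specific commands."""
    if platform == "nxos":
        # NX-OS style: enter the VLAN, then name it.
        return [f"vlan {vlan_id}", f"  name {name}"]
    if platform == "junos":
        # Junos style: a single "set" statement.
        return [f"set vlans {name} vlan-id {vlan_id}"]
    raise ValueError(f"unsupported platform: {platform}")


if __name__ == "__main__":
    # The same intent rendered for two platforms.
    for platform in ("nxos", "junos"):
        print(platform, render_vlan(platform, 10, "blue"))
```

The caller only states what it wants (VLAN 10 named blue); the per-platform syntax stays inside the function.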

So, why use Ansible and not Python?

Many might argue that whatever Ansible can do, a Python script can do, and this is true. However, Ansible has several advantages over plain Python for managing network nodes:

- Ansible abstracts many of the more tedious Python operations into very simple modules (like creating files and directories, or starting NETCONF or SSH sessions).

- Ansible handles error conditions and provides very simple knobs to control playbook execution when you encounter errors.

- Ansible transparently transforms XML or JSON data into native Python data structures (lists and dicts), so you do not have to use Python libraries like lxml or json to decode the data yourself.

- When you start running at large scale, your Python scripts need threading or multiprocessing to execute tasks concurrently on multiple devices, whereas Ansible runs tasks against multiple hosts in parallel out of the box (using a configurable number of forks).
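
To illustrate the concurrency point in the list above, this is roughly the worker-pool plumbing a standalone Python script has to manage itself. The device names and the `collect_uptime` task are placeholders; a real task would open an SSH or NETCONF session instead of returning canned data.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical inventory.
DEVICES = ["r1", "r2", "r3", "r4"]


def collect_uptime(hostname):
    """Placeholder for a real per-device call (SSH, NETCONF, ...)."""
    return {"hostname": hostname, "reachable": True}


def run_on_all(devices, task, max_workers=5):
    """Run task against every device concurrently; results keep inventory order."""
    # max_workers=5 is an arbitrary choice for this sketch.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(task, devices))


if __name__ == "__main__":
    for result in run_on_all(DEVICES, collect_uptime):
        print(result)
```

On top of this, a script would still need its own error handling, retries and per-host reporting, which Ansible provides as part of the framework.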

In conclusion, Ansible is a complete framework built on Python that hides most of Python's complexity from the user and provides easy-to-use modules to get the job done. It lets you concentrate on the important tasks (like configuring nodes or collecting output from managed nodes) rather than figuring out how to perform a complex operation in Python. To extend Ansible, or to implement complex data transformation and manipulation, you will still need to know Python and write Python code. Nevertheless, roughly 70-80% of common networking tasks can be carried out with no Python code at all.

What is NAPALM?

As discussed above, the default Ansible modules for interacting with different vendors' hardware are vendor-specific (junos_command, ios_command) and return output with differing data structures. So even though Ansible uses the vendor API to communicate with the target device, in a multi-vendor environment we still have to deal with each API separately in order to manage these devices and collect output from them. This is the problem NAPALM solves. NAPALM (Network Automation and Programmability Abstraction Layer with Multivendor support) tries to deliver a common API for managing different vendors' operating systems. It is a Python library that relies on existing per-vendor Python libraries (like Juniper PyEZ) to communicate with each platform, but it abstracts these libraries behind a common API which, based on the OS of the device being managed, triggers the correct API call to the target node.
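
The abstraction idea can be sketched as a small dispatch table: one common function, one normalizer per platform. This is not NAPALM's code or its real return schema; the vendor reply shapes, the helper names and the output fields (`remote_as`, `is_up`) are invented for illustration.

```python
# Hypothetical vendor replies describing the same BGP neighbor in different shapes.
JUNOS_REPLY = {
    "bgp-peer": [
        {"peer-address": "10.0.0.2", "peer-as": "65002", "peer-state": "Established"}
    ]
}
IOSXR_REPLY = {
    "neighbors": {"10.0.0.2": {"remote_as": 65002, "state": "Established"}}
}


def _junos_bgp(reply):
    """Normalize the made-up Junos-style reply."""
    return {
        peer["peer-address"]: {
            "remote_as": int(peer["peer-as"]),
            "is_up": peer["peer-state"] == "Established",
        }
        for peer in reply["bgp-peer"]
    }


def _iosxr_bgp(reply):
    """Normalize the made-up IOS-XR-style reply."""
    return {
        address: {
            "remote_as": neighbor["remote_as"],
            "is_up": neighbor["state"] == "Established",
        }
        for address, neighbor in reply["neighbors"].items()
    }


# The device OS selects the vendor-specific handler behind one common call.
NORMALIZERS = {"junos": _junos_bgp, "iosxr": _iosxr_bgp}


def get_bgp_neighbors(platform, reply):
    """Common entry point: same output structure whatever the platform."""
    try:
        normalizer = NORMALIZERS[platform]
    except KeyError:
        raise ValueError(f"unsupported platform: {platform}") from None
    return normalizer(reply)


if __name__ == "__main__":
    print(get_bgp_neighbors("junos", JUNOS_REPLY))
    print(get_bgp_neighbors("iosxr", IOSXR_REPLY))
```

Code that consumes the result no longer needs to know which vendor produced it, which is the property that makes multi-vendor playbooks practical.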

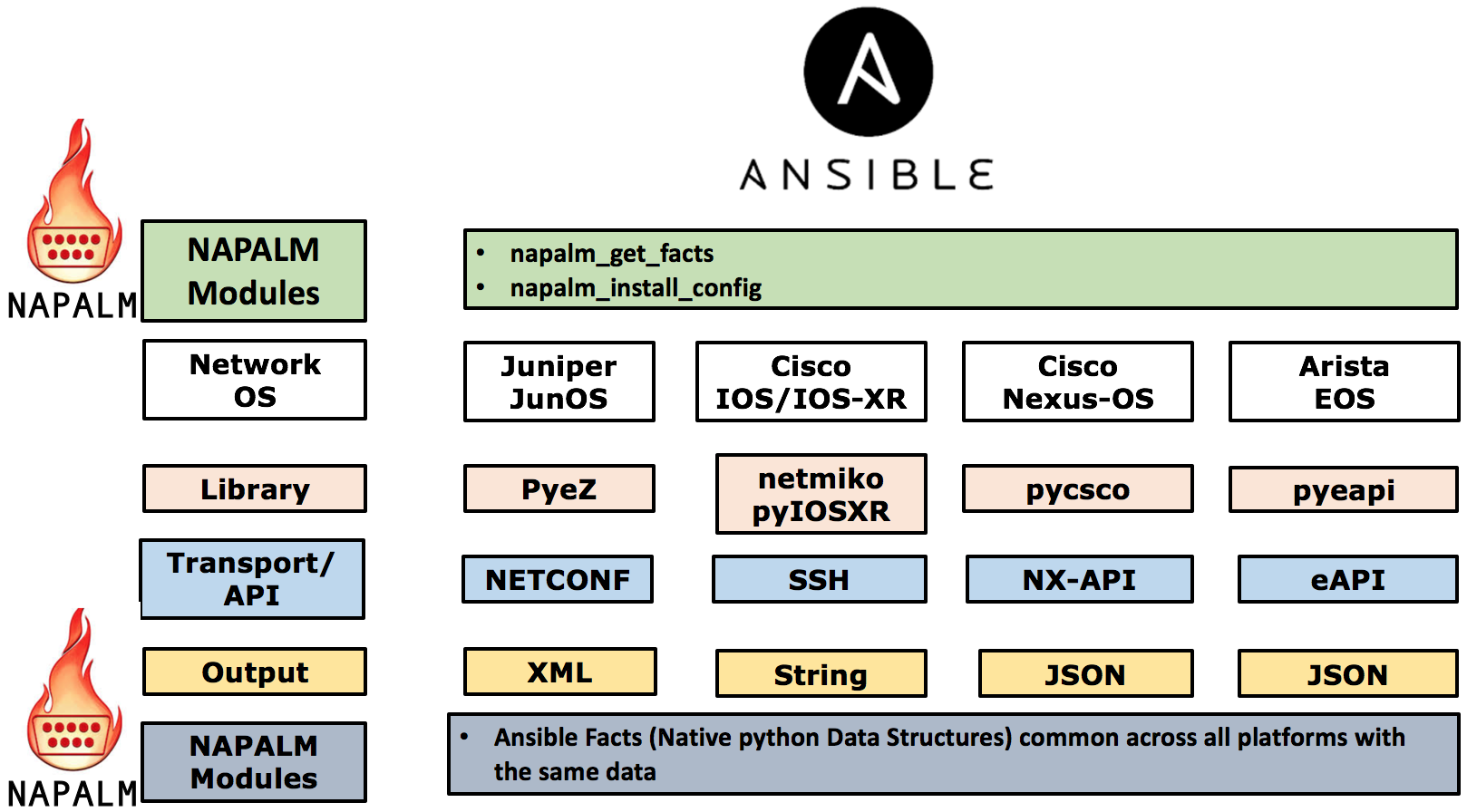

NAPALM is integrated with Ansible and provides several modules for interacting with different vendors' equipment. The most commonly used NAPALM Ansible modules are:

- napalm_get_facts, which retrieves different kinds of output from the devices and returns it in a common data structure.

- napalm_install_config, which loads configuration onto the managed nodes.

The below diagram outlines the interactions between Ansible and NAPALM in a Multi-Vendor Setup.

Furthermore, NAPALM emulates operations such as compare config and commit config, which some network operating systems, such as Cisco IOS, do not offer natively. It thus delivers a common API for interacting with different vendors' network operating systems. The diagram below shows the output of the same operation (get_bgp_neighbors) on a Junos device and an IOS-XR device, which clearly illustrates the benefit of having a common API for interacting with different vendors' equipment.
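
Conceptually, a compare operation on a platform without native candidate configurations boils down to diffing the running configuration against the intended one. The sketch below shows that idea with Python's standard `difflib`; it is an assumption-laden illustration rather than NAPALM's actual implementation, and both configuration snippets are made up.

```python
import difflib

# Hypothetical configuration snippets.
RUNNING = """\
hostname r1
interface Loopback0
 ip address 10.0.0.1 255.255.255.255
"""
CANDIDATE = """\
hostname r1
interface Loopback0
 description router-id
 ip address 10.0.0.1 255.255.255.255
"""


def compare_config(running, candidate):
    """Return a unified diff of two configs; empty string if they are identical."""
    diff = difflib.unified_diff(
        running.splitlines(),
        candidate.splitlines(),
        fromfile="running",
        tofile="candidate",
        lineterm="",
    )
    return "\n".join(diff)


if __name__ == "__main__":
    print(compare_config(RUNNING, CANDIDATE))
```

Reviewing such a diff before committing is what makes a change safe to automate: an empty diff means there is nothing to push.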

In the next article, we will build a sample topology and show how to generate the configuration using Ansible and Jinja2 templates, and how to push that configuration to the managed nodes using NAPALM.

References

Ansible Networking Modules

http://docs.ansible.com/ansible/latest/list_of_network_modules.html

NAPALM Documentation

https://napalm.readthedocs.io/en/latest/index.html

NAPALM Ansible

https://napalm.readthedocs.io/en/latest/integrations/ansible/modules/index.html

NAPALM Supported Devices

https://napalm.readthedocs.io/en/latest/support/index.html
